PyTorch Basics

- Preface

These notes were written in late March 2025 and follow the Bilibili course "PyTorch深度学习快速入门教程(绝对通俗易懂!)" (a quick-start PyTorch deep learning tutorial).

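Introduction to the Tools

torch.utils.data.Dataset

`from torch.utils.data import Dataset`

Reading data in PyTorch mainly involves two classes: Dataset and DataLoader.

- Dataset: provides a way to get the data and its labels. It answers two questions:
  - how to get each individual sample and its label
  - how many samples there are in total
- DataLoader: serves the data to the downstream network in different forms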
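The two responsibilities of a Dataset map onto two methods, `__getitem__` and `__len__`. Below is a minimal sketch under stated assumptions: the class name `ToyDataset` and its in-memory fake data are hypothetical and only illustrate the pattern.

```python
import torch
from torch.utils.data import Dataset


class ToyDataset(Dataset):
    """Hypothetical in-memory dataset: sample i is a 3-element vector, its label is i % 2."""

    def __init__(self, num_samples: int = 6):
        # Fake features and labels, generated only for illustration
        self.features = torch.arange(num_samples * 3, dtype=torch.float32).reshape(num_samples, 3)
        self.labels = torch.arange(num_samples) % 2

    def __getitem__(self, idx):
        # How to get one sample and its label
        return self.features[idx], self.labels[idx]

    def __len__(self):
        # How many samples there are in total
        return len(self.features)


dataset = ToyDataset()
sample, label = dataset[4]
print(len(dataset), sample, label)
```

A real dataset would typically read files from disk inside `__getitem__` instead of indexing a tensor.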

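torch.utils.tensorboard

`from torch.utils.tensorboard import SummaryWriter  # import SummaryWriter`

`# add_scalar`

`# add_image`

Opening the TensorBoard log files:

`tensorboard --logdir="logs"`

- Run this command in Command Prompt. Note that the environment name shown in parentheses at the prompt must be the environment in which PyTorch is installed.
- Writing logs with the same tag several times can produce incorrect plots; delete the stale log files before rerunning.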
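As a rough sketch of how scalars end up in a log directory, the example below writes the line y = 2x with `add_scalar`. The temporary directory and the tag name "y=2x" are assumptions made for this illustration, and the `tensorboard` package must be installed.

```python
import os
import tempfile

from torch.utils.tensorboard import SummaryWriter

# Throwaway log directory for this sketch; a project would usually use "logs"
log_dir = tempfile.mkdtemp(prefix="logs_")

writer = SummaryWriter(log_dir)
for step in range(100):
    # add_scalar(tag, scalar_value, global_step): one point of the curve per call
    writer.add_scalar("y=2x", 2 * step, step)
writer.close()  # flush everything to disk

event_files = os.listdir(log_dir)
print(event_files)
```

Pointing `tensorboard --logdir` at that directory should then show one curve under the tag "y=2x".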

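torchvision.transforms

The tensor type

How are transforms used, and how is an image converted to a tensor?

`from torch.utils.tensorboard import SummaryWriter`

Why use the tensor type?

- A tensor carries many of the attributes a neural network needs (for example, whether gradients are tracked and which device the data lives on)

Using some of the tools in transforms

`from PIL import Image`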

torchvision.datasets

Similar to Dataset in torch.utils.data *(the dataset classes here are in fact subclasses of torch.utils.data.Dataset)*

`import torchvision`

Using torchvision.datasets (with CIFAR10 as the example)

`torchvision.datasets.CIFAR10(root: Union[str, Path], train: bool = True, transform: Optional[Callable] = None, target_transform: Optional[Callable] = None, download: bool = False)`

torch.utils.data.DataLoader

`import torchvision`

Note: the imgs batch returned by a DataLoader must be displayed with SummaryWriter.add_images(), not add_image().

`torch.utils.data.DataLoader(dataset, batch_size=1, shuffle=None, sampler=None, batch_sampler=None, num_workers=0, collate_fn=None, pin_memory=False, drop_last=False, timeout=0, worker_init_fn=None, multiprocessing_context=None, generator=None, *, prefetch_factor=None, persistent_workers=False, pin_memory_device='', in_order=True)`
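To make the effect of `batch_size` and `drop_last` concrete, here is a small sketch that uses random tensors in place of a real image dataset (the sizes are arbitrary assumptions). It also shows why a batch is four-dimensional, which is the reason `add_images()` is the matching TensorBoard call.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# 10 fake RGB "images" of size 32x32 with integer labels, for illustration only
imgs = torch.rand(10, 3, 32, 32)
targets = torch.arange(10)
dataset = TensorDataset(imgs, targets)

loader = DataLoader(dataset, batch_size=4, shuffle=True, num_workers=0, drop_last=False)

batch_shapes = []
for batch_imgs, batch_targets in loader:
    # Samples are stacked along a new first dimension: (batch, C, H, W)
    batch_shapes.append(tuple(batch_imgs.shape))
print(batch_shapes)

# With drop_last=True the final, incomplete batch of 2 samples is discarded
num_full_batches = len(DataLoader(dataset, batch_size=4, drop_last=True))
print(num_full_batches)
```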

Neural Networks: torch.nn

nn == neural network

Basic Skeleton (Containers)

torch.nn.Module

`import torch`
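A minimal sketch of the nn.Module pattern: subclass it, call `super().__init__()`, and define `forward`. The class name `AddOne` and its behavior are hypothetical, chosen only to keep the example tiny.

```python
import torch
from torch import nn


class AddOne(nn.Module):
    """Hypothetical toy module: the forward pass just adds 1 to the input."""

    def __init__(self):
        super().__init__()  # required so that nn.Module can set up its internals

    def forward(self, x):
        return x + 1


model = AddOne()
output = model(torch.tensor(1.0))  # calling the module runs forward()
print(output)
```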

torch.nn.Sequential

- Similar to torchvision.transforms.Compose(): it chains many operations together
- Keeps the code tidy and convenient

`import torch`
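As an illustration of how Sequential chains layers, the sketch below stacks convolution, pooling, and linear layers for a CIFAR10-sized input (3x32x32). The specific layer sizes are an assumption for this example, not necessarily the network used in the notes.

```python
import torch
from torch import nn

model = nn.Sequential(
    nn.Conv2d(3, 32, kernel_size=5, padding=2),   # 3x32x32 -> 32x32x32
    nn.MaxPool2d(2),                              # -> 32x16x16
    nn.Conv2d(32, 32, kernel_size=5, padding=2),  # -> 32x16x16
    nn.MaxPool2d(2),                              # -> 32x8x8
    nn.Conv2d(32, 64, kernel_size=5, padding=2),  # -> 64x8x8
    nn.MaxPool2d(2),                              # -> 64x4x4
    nn.Flatten(),                                 # -> 1024
    nn.Linear(64 * 4 * 4, 64),
    nn.Linear(64, 10),                            # 10 class scores
)

x = torch.ones(64, 3, 32, 32)  # a fake batch, handy for checking the shapes
out = model(x)
print(out.shape)
```

Feeding a tensor of ones through the model is a quick way to catch shape mismatches between layers.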

Convolution Layers

The convolution operation

`import torch`

[Figure: the convolution operation illustrated for four settings: stride=1, padding=0; stride=1, padding=2; stride=2, padding=0; stride=2, padding=1]
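The four stride/padding settings can be tried out with `torch.nn.functional.conv2d`. The 5x5 input and 3x3 kernel below are made-up values for illustration. For dilation 1, each output side length is floor((H + 2 * padding - kernel_size) / stride) + 1.

```python
import torch
import torch.nn.functional as F

# 5x5 input and 3x3 kernel, chosen only for illustration
x = torch.tensor([[1, 2, 0, 3, 1],
                  [0, 1, 2, 3, 1],
                  [1, 2, 1, 0, 0],
                  [5, 2, 3, 1, 1],
                  [2, 1, 0, 1, 1]], dtype=torch.float32)
kernel = torch.tensor([[1, 2, 1],
                       [0, 1, 0],
                       [2, 1, 0]], dtype=torch.float32)

# F.conv2d expects (batch, channels, H, W) input and (out_ch, in_ch, kH, kW) weight
x = x.reshape(1, 1, 5, 5)
kernel = kernel.reshape(1, 1, 3, 3)

outputs = {}
for stride, padding in [(1, 0), (1, 2), (2, 0), (2, 1)]:
    outputs[(stride, padding)] = F.conv2d(x, kernel, stride=stride, padding=padding)
    print(stride, padding, tuple(outputs[(stride, padding)].shape))
```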

torch.nn.Conv2d()

Official documentation: "Conv2d" (PyTorch 2.6 documentation)

`class torch.nn.Conv2d(in_channels, out_channels, kernel_size, stride=1, padding=0, dilation=1, groups=1, bias=True, padding_mode='zeros', device=None, dtype=None)`

`import torch`
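A short sketch of how `in_channels`, `out_channels`, and `kernel_size` determine the shapes. The batch of random 32x32 images is an assumption made for this example.

```python
import torch
from torch import nn

conv = nn.Conv2d(in_channels=3, out_channels=6, kernel_size=3, stride=1, padding=0)

x = torch.rand(64, 3, 32, 32)  # a fake batch of 64 RGB 32x32 images
y = conv(x)

print(y.shape)            # 6 output channels; each side shrinks from 32 to 30
print(conv.weight.shape)  # one 3x3 kernel per (output channel, input channel) pair
```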

Pooling Layers

torch.nn.MaxPool2d()

`torch.nn.MaxPool2d(kernel_size, stride=None, padding=0, dilation=1, return_indices=False, ceil_mode=False)`

The dilation parameter works as in dilated (atrous) convolution: the kernel elements are spaced apart from one another.

`import torch`
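The effect of `ceil_mode` is easiest to see on a small input. This sketch uses a made-up 5x5 matrix and a 3x3 window; because `stride` defaults to `kernel_size`, the windows do not overlap.

```python
import torch
from torch import nn

x = torch.tensor([[1, 2, 0, 3, 1],
                  [0, 1, 2, 3, 1],
                  [1, 2, 1, 0, 0],
                  [5, 2, 3, 1, 1],
                  [2, 1, 0, 1, 1]], dtype=torch.float32).reshape(1, 1, 5, 5)

pool_ceil = nn.MaxPool2d(kernel_size=3, ceil_mode=True)    # keep partial windows at the border
pool_floor = nn.MaxPool2d(kernel_size=3, ceil_mode=False)  # drop partial windows

out_ceil = pool_ceil(x)
out_floor = pool_floor(x)
print(out_ceil)
print(out_floor)
```

With `ceil_mode=True` the 5x5 input yields a 2x2 output; with the default `ceil_mode=False` only the single complete window survives.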

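Why use max pooling?

It greatly reduces the amount of data while keeping the salient features of the input, which speeds up computation.

`# apply max pooling to images`

After max pooling, an image looks as if it has been pixelated.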

Padding Layers

This is the edge padding discussed earlier, offered as standalone layers; they are rarely needed.

Non-linear Activations

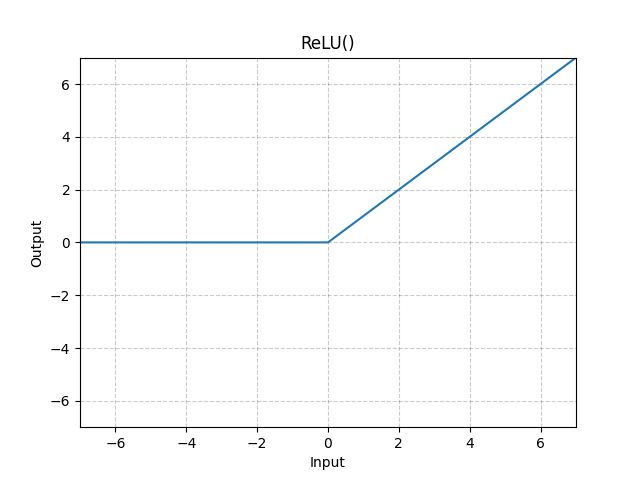

torch.nn.ReLU()

- Sets negative inputs to zero and leaves positive inputs unchanged

`import torch`
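A minimal sketch of ReLU on a hand-written 2x2 tensor (the values are arbitrary):

```python
import torch
from torch import nn

relu = nn.ReLU()  # inplace=False by default, so the input tensor is left untouched

x = torch.tensor([[1.0, -0.5],
                  [-1.0, 3.0]])
y = relu(x)  # negatives become 0, positives pass through
print(y)
```

Passing `inplace=True` would overwrite `x` instead of returning a new tensor, which saves memory but loses the original values.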

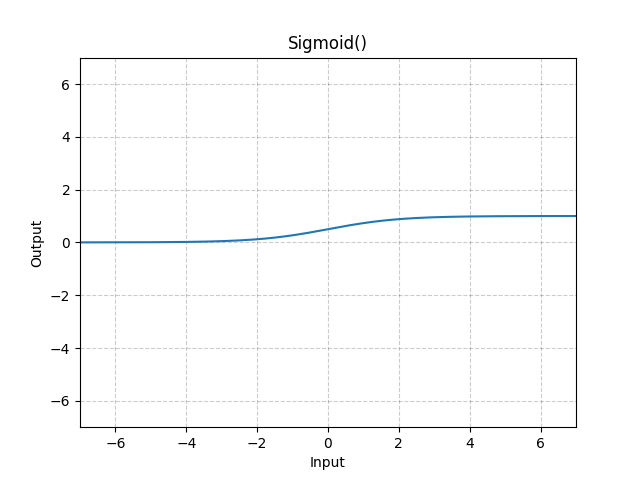

torch.nn.Sigmoid()

`from torch import nn`

The non-linear activation functions are all used in essentially the same way; only the formulas behind them differ.

Linear Layers

`import torch`
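One common use of a linear layer is to map a flattened image to class scores. The sketch below assumes a fake batch of 32x32 RGB images and 10 output classes; both numbers are illustrative.

```python
import torch
from torch import nn

x = torch.rand(64, 3, 32, 32)          # fake batch of images
flat = torch.flatten(x, start_dim=1)   # keep the batch dimension: 3 * 32 * 32 = 3072 features

linear = nn.Linear(in_features=3072, out_features=10)
out = linear(flat)                     # computes flat @ weight.T + bias

print(flat.shape, out.shape, linear.weight.shape)
```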

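Other, less commonly used layers

Normalization Layers

- Can speed up neural network training

Recurrent Layers

Transformer Layers

Dropout Layers

- Randomly zero some elements of the input during training, which helps reduce overfitting

Sparse Layers

- Mainly used in natural language processing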

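Loss Functions

- Purpose:
  - Measures the gap between the actual output and the target
  - Provides a basis for updating the model so that its output improves **(backpropagation)**

torch.nn.L1Loss()

- Computes the mean or the sum of the absolute differences between input and target

`import torch`

torch.nn.MSELoss()

- Computes the mean or the sum of the squared differences

`import torch`

The reduction parameter of MSELoss() works the same way as in L1Loss().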
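The two losses and the effect of `reduction` can be checked by hand on three numbers. The input and target values below are made up for this worked example.

```python
import torch
from torch import nn

inputs = torch.tensor([1.0, 2.0, 3.0])
targets = torch.tensor([1.0, 2.0, 5.0])

l1_mean = nn.L1Loss()(inputs, targets)                   # (0 + 0 + 2) / 3
l1_sum = nn.L1Loss(reduction="sum")(inputs, targets)     # 0 + 0 + 2
mse_mean = nn.MSELoss()(inputs, targets)                 # (0 + 0 + 4) / 3
mse_sum = nn.MSELoss(reduction="sum")(inputs, targets)   # 0 + 0 + 4

print(l1_mean.item(), l1_sum.item(), mse_mean.item(), mse_sum.item())
```

The default is `reduction="mean"`, which divides by the number of elements.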

torch.nn.CrossEntropyLoss()

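CrossEntropyLoss: cross-entropy

- The computation is more involved; see the official documentation for details

`import torch`

- Mainly used in classification problems; the resulting loss is then backpropagated

`import torchvision`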
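For a single sample with raw scores x and true class c, the unweighted cross-entropy loss equals -x[c] + log(sum_j exp(x[j])). The sketch below checks that formula against `nn.CrossEntropyLoss` on made-up scores for three classes.

```python
import math

import torch
from torch import nn

# Raw scores (logits) for one sample over 3 classes; the true class is index 1
logits = torch.tensor([[0.1, 0.2, 0.3]])
target = torch.tensor([1])

loss = nn.CrossEntropyLoss()(logits, target)

# The same value by hand: -x[class] + log(sum_j exp(x[j]))
manual = -0.2 + math.log(math.exp(0.1) + math.exp(0.2) + math.exp(0.3))
print(loss.item(), manual)
```

Note that the loss expects raw scores, not probabilities: the softmax is applied internally.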

Optimizers (torch.optim)

`# ......`
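A typical optimizer step follows the order zero_grad, backward, step. The sketch below fits a tiny linear model to made-up data for y = x0 + x1; the model, data, and learning rate are assumptions chosen only to keep the example self-contained.

```python
import torch
from torch import nn

torch.manual_seed(0)
model = nn.Linear(2, 1)
loss_fn = nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

# Fake data for the target function y = x0 + x1
x = torch.tensor([[0.0, 1.0], [1.0, 0.0], [1.0, 1.0], [2.0, 1.0]])
y = x.sum(dim=1, keepdim=True)

losses = []
for epoch in range(50):
    optimizer.zero_grad()        # clear gradients left over from the previous step
    loss = loss_fn(model(x), y)
    loss.backward()              # compute gradients by backpropagation
    optimizer.step()             # update the parameters using those gradients
    losses.append(loss.item())

print(losses[0], losses[-1])
```

Forgetting `zero_grad()` makes gradients accumulate across iterations, which is a common source of training bugs.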

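Using Network Models

Using and modifying existing network models

Using VGG16 as the example

`import torch`

The last step of the VGG16 network is a linear layer with 4096 input features and 1000 output features, which shows that the model classifies into 1000 classes.

Our CIFAR10 dataset has only 10 classes, so how can VGG16 be applied to it?

`# Method 1`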

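Saving and Loading Models

Saving

`import torchvision`

Method 2 is the officially recommended one, and its saved file is smaller than that of method 1.

Loading

`import torchvision`

- Notes
  - The loading method must match the saving method
  - Older versions of PyTorch had the pitfall above: the model class had to be imported before loading. Current versions appear not to need this, although loading a whole pickled model generally still requires its class definition to be importable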
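The sketch below illustrates the state_dict approach, which is the one PyTorch's documentation recommends; it is an assumption that this is what the notes call method 2. The file name is hypothetical and a small linear layer stands in for a real network.

```python
import os
import tempfile

import torch
from torch import nn

model = nn.Linear(4, 2)
path = os.path.join(tempfile.mkdtemp(), "model_state.pth")  # hypothetical file name

# Save only the parameters (a dict of tensors), not the pickled model object
torch.save(model.state_dict(), path)

# To load, rebuild the same architecture first, then fill in the parameters
restored = nn.Linear(4, 2)
restored.load_state_dict(torch.load(path))

print(torch.equal(model.weight, restored.weight))
```

Saving the whole object with `torch.save(model, path)` also works, but the file then depends on the class definition being available when it is loaded.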

Model Training

The complete model-training routine

- Build the network in model.py

`import torch`

- Train the network in train.py

`import torch`
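The overall routine can be sketched in one file as follows. Everything here is a simplified assumption: `TinyNet` is a hypothetical stand-in for the network in model.py, and random tensors replace the real training and test sets so that the example runs without downloads.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset


class TinyNet(nn.Module):
    """Hypothetical stand-in for the network defined in model.py."""

    def __init__(self):
        super().__init__()
        self.model = nn.Sequential(nn.Flatten(), nn.Linear(3 * 8 * 8, 10))

    def forward(self, x):
        return self.model(x)


torch.manual_seed(0)
# Random tensors stand in for the real training and test sets
train_data = TensorDataset(torch.rand(64, 3, 8, 8), torch.randint(0, 10, (64,)))
test_data = TensorDataset(torch.rand(32, 3, 8, 8), torch.randint(0, 10, (32,)))
train_loader = DataLoader(train_data, batch_size=16, shuffle=True)
test_loader = DataLoader(test_data, batch_size=16)

net = TinyNet()
loss_fn = nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(net.parameters(), lr=0.01)

total_train_step = 0
for epoch in range(2):
    net.train()  # training mode (matters for layers such as Dropout and BatchNorm)
    for imgs, targets in train_loader:
        loss = loss_fn(net(imgs), targets)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        total_train_step += 1

    net.eval()  # evaluation mode
    total_test_loss, total_correct = 0.0, 0
    with torch.no_grad():  # gradients are not needed for evaluation
        for imgs, targets in test_loader:
            outputs = net(imgs)
            total_test_loss += loss_fn(outputs, targets).item()
            total_correct += (outputs.argmax(1) == targets).sum().item()
    accuracy = total_correct / len(test_data)
    print(f"epoch {epoch}: test loss {total_test_loss:.4f}, accuracy {accuracy:.2f}")
```

In a real project the loop would usually also log to TensorBoard and save a checkpoint after each epoch.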

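Training on a GPU

- Only the network model, the loss function, and the data (inputs and labels) can be moved to the GPU; the dataset, the optimizer, and similar objects cannot (they have no .cuda() method)
- Without a GPU, you can train on Google Colab (access may require a VPN in some regions); see Bilibili episode P30

Method 1: .cuda()

`# network model`

- A better practice is to check whether a GPU is available before calling .cuda(), for example:

`if torch.cuda.is_available():`

Method 2: .to()

`# define the training device`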
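A minimal sketch of the `.to(device)` pattern, written so that it falls back to the CPU when no GPU is present. The small linear model and random data are assumptions for illustration.

```python
import torch
from torch import nn

# Fall back to the CPU when no GPU is available
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Module.to() moves the parameters in place and returns the module itself
model = nn.Linear(4, 2).to(device)
loss_fn = nn.CrossEntropyLoss().to(device)

# Tensor.to() returns a new tensor, so the result must be reassigned
imgs = torch.rand(8, 4).to(device)
targets = torch.randint(0, 2, (8,)).to(device)

loss = loss_fn(model(imgs), targets)
print(device, loss.device)
```

Because the device is chosen in one place, the same script runs unchanged on machines with and without a GPU.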